The AI Babel Fish? MCP, PocketFlow, and Powerful AI

What is the Babel Fish Moment for AI?

In The Hitchhiker's Guide to the Galaxy, there’s the Babel Fish. When you pop it in your ear, you can instantly understand any language spoken across the universe.

Our AI universe needs a Babel Fish. We have powerful LLMs capable of complex reasoning and conversation. However, they are fundamentally text predictors: on their own they cannot execute actions in the real world, such as sending an email, booking a flight, or even performing reliable calculations.

To unlock their potential in the real world, LLMs need to connect with external tools, databases, and APIs. But here lies the challenge: each tool often speaks its own unique dialect, so each one requires a bespoke integration. Connecting multiple tools this way is a significant engineering effort.

This is where the Model Context Protocol (MCP) enters the stage. It's not just another protocol; it's potentially the closest thing we have to an AI Babel Fish – a standard designed to become the universal translator for AI-tool interaction.

Let’s look more closely at why MCP matters and how it can work alongside internal workflow orchestration frameworks like PocketFlow.

Why MCP? Tackling the Tool Integration Challenge

The need for something like MCP stems directly from the limitations of LLMs and the complexities of current integration practices:

LLMs Need Tools to Act: As noted, LLMs excel at language but lack inherent capabilities for tasks requiring external interaction or precise execution (like math, current data retrieval, or API calls).

Bespoke Integrations are Complex: Currently, connecting an LLM to even a single tool (like a search engine) requires custom code. Integrating multiple tools, each with different APIs and authentication methods, quickly becomes unwieldy and brittle.

Scalability Issues: This complexity makes it hard to build sophisticated AI assistants that can leverage a wide array of tools seamlessly. The engineering effort for each new tool is substantial.

MCP aims to directly address these points by standardizing the communication layer.

At its technical core, MCP provides a structured format enabling AI models (or the applications hosting them) to:

Discover Tools: Programmatically understand what external capabilities are available.

Invoke Tools: Send standardized requests (specifying `toolName` and `arguments`) to these tools.

Receive Results: Interpret structured responses (containing `result` or `error`).

```typescript
// Example MCP-style request payload (simplified)
const exampleRequest = {
  "toolName": "add",
  "arguments": { "a": 5, "b": 10 }
};

// Example MCP-style success response payload
const exampleResponse = { "result": 15 };
```
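The payload shapes above can be given types so that a client handles success and failure explicitly. The sketch below is only an illustration and not part of this article's codebase: the names `McpRequest`, `McpResponse`, `isMcpError`, and `toJsonRpcToolCall` are hypothetical. It is also worth knowing that the published MCP specification carries tool calls inside JSON-RPC 2.0 messages (method `tools/call`, with `name` and `arguments` as params), so the flat `toolName`/`arguments` shape used in this post is best read as a simplification. The last function shows how the simplified request could map onto that envelope.

```typescript
// Simplified request shape used in this article.
interface McpRequest {
  toolName: string;
  arguments: Record<string, unknown>;
}

// A response carries either a result or an error, never both.
type McpSuccess = { result: unknown; error?: undefined };
type McpError = { error: string; result?: undefined };
type McpResponse = McpSuccess | McpError;

// Type guard: narrows a response to the error branch.
function isMcpError(res: McpResponse): res is McpError {
  return typeof res.error === "string";
}

// Maps the simplified request onto a JSON-RPC 2.0 "tools/call" message.
function toJsonRpcToolCall(req: McpRequest, id: number) {
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name: req.toolName, arguments: req.arguments },
  };
}

const request: McpRequest = { toolName: "add", arguments: { a: 5, b: 10 } };
console.log(JSON.stringify(toJsonRpcToolCall(request, 1)));
```

Keeping the simplified shape and the wire envelope as separate types means the rest of the client code does not need to change if the transport details do.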

But MCP is more than just an API spec. It aspires to be the universal translator, the Babel Fish, between AI reasoning and external action.

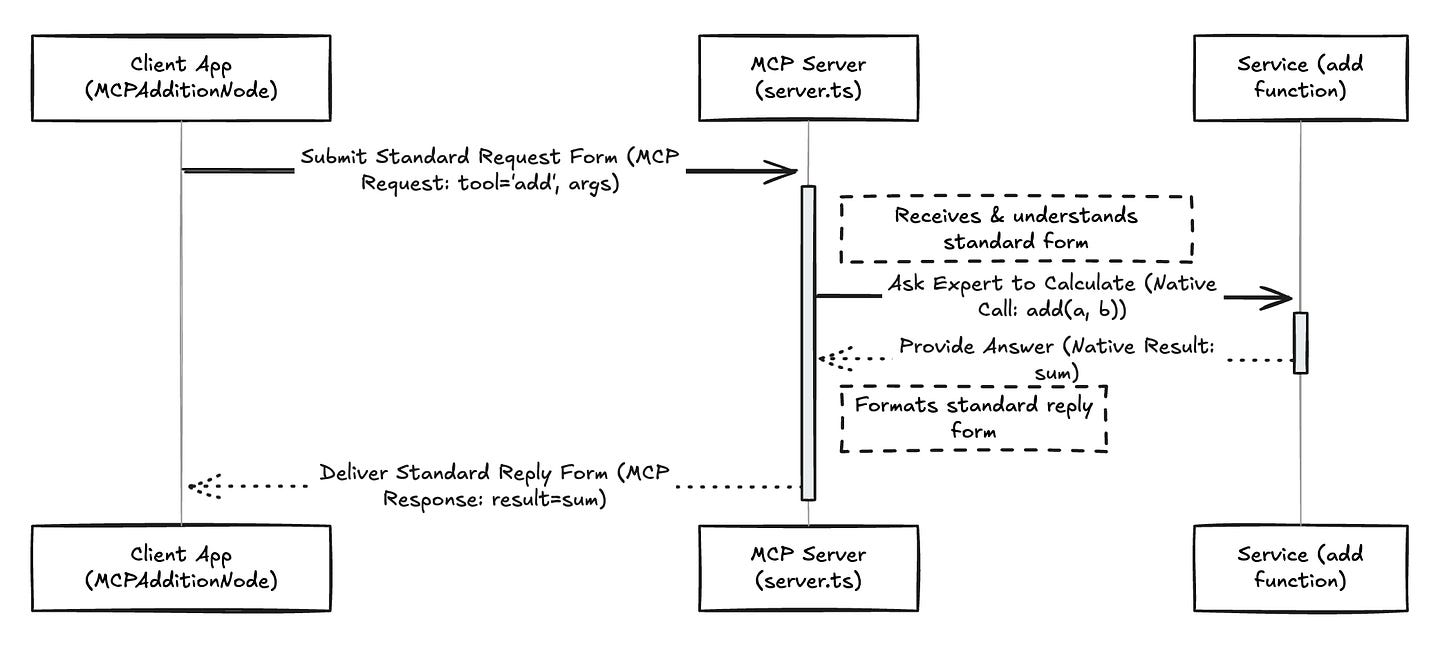

The Key Components Involved in Making MCP Work

MCP Client: User-facing applications or agent systems that leverage LLMs and need to interact with external tools (e.g., AI assistants, data analysis platforms, workflow automation tools).

Protocol: The MCP standard itself – defining the structure of requests and responses.

MCP Server: A crucial translation layer, typically built and maintained by the Service Provider. It receives requests in the standard MCP format from the Client and translates them into commands the underlying Service can understand. It also formats the Service's response back into the standard MCP format.

Service: The actual tool or capability being exposed (e.g., a database, a search engine API, a calculation engine, an email service).

This structure smartly distributes the development burden: service providers focus on creating MCP Servers for their specific services, while client developers can interact with any MCP-compliant service using the same standardized protocol.
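To make this division of labor concrete, here is a minimal in-process sketch of the four components, with no network involved. Everything in it is hypothetical (the `McpServer` interface, `makeServer`, `callTool`, and the `add` and `shout` services). It only illustrates that a single client function can talk to any service once each one sits behind a server that speaks the same request and response shape.

```typescript
type McpRequest = { toolName: string; arguments: Record<string, unknown> };
type McpResponse = { result: unknown } | { error: string };
type ToolFn = (args: Record<string, unknown>) => unknown;

// Protocol-facing surface that every server exposes (hypothetical).
interface McpServer {
  listTools(): string[];                 // discovery
  handle(req: McpRequest): McpResponse;  // invocation
}

// Services: ordinary functions that know nothing about the protocol.
const add = (a: number, b: number): number => a + b;
const shout = (text: string): string => text.toUpperCase() + "!";

// Server: translates standard requests into service calls and back.
function makeServer(tools: Map<string, ToolFn>): McpServer {
  return {
    listTools: () => Array.from(tools.keys()),
    handle(req) {
      const tool = tools.get(req.toolName);
      if (!tool) return { error: `Unknown tool: ${req.toolName}` };
      try {
        return { result: tool(req.arguments) };
      } catch (e) {
        return { error: e instanceof Error ? e.message : String(e) };
      }
    },
  };
}

const mathServer = makeServer(
  new Map<string, ToolFn>([["add", (args) => add(Number(args.a), Number(args.b))]]),
);
const textServer = makeServer(
  new Map<string, ToolFn>([["shout", (args) => shout(String(args.text))]]),
);

// Client: one function, reused unchanged for every server.
function callTool(server: McpServer, toolName: string, args: Record<string, unknown>): unknown {
  const res = server.handle({ toolName, arguments: args });
  if ("error" in res) throw new Error(res.error);
  return res.result;
}

console.log(mathServer.listTools());
console.log(callTool(mathServer, "add", { a: 5, b: 10 }));  // 15
console.log(callTool(textServer, "shout", { text: "hi" })); // HI!
```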

MCP's Potential

We believe MCP could evolve significantly.

Much like API Gateways in microservices, MCP Servers could evolve to handle standardized authentication, authorization, schema validation, versioning, observability, and policy enforcement for AI tool usage.
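As a rough illustration of what such gateway-style responsibilities might look like, the sketch below wraps a tool handler with two checks, an API-key allow-list and a basic argument check, that run before the underlying service is reached. This is speculative and not drawn from the MCP specification or any existing server: `withPolicy`, `ToolPolicy`, and the key values are all hypothetical.

```typescript
type McpResponse = { result: unknown } | { error: string };
type Handler = (args: Record<string, unknown>) => McpResponse;

// Hypothetical per-tool policy: who may call it and which numeric arguments it needs.
interface ToolPolicy {
  allowedKeys: Set<string>;
  requiredNumbers: string[];
}

// Wraps a handler so that authorization and validation run before the service does.
function withPolicy(policy: ToolPolicy, handler: Handler) {
  return (apiKey: string, args: Record<string, unknown>): McpResponse => {
    if (!policy.allowedKeys.has(apiKey)) {
      return { error: "unauthorized" };
    }
    for (const name of policy.requiredNumbers) {
      const value = args[name];
      if (typeof value !== "number" || !Number.isFinite(value)) {
        return { error: `argument "${name}" must be a finite number` };
      }
    }
    return handler(args);
  };
}

const guardedAdd = withPolicy(
  { allowedKeys: new Set(["demo-key"]), requiredNumbers: ["a", "b"] },
  (args) => ({ result: (args.a as number) + (args.b as number) }),
);

console.log(JSON.stringify(guardedAdd("demo-key", { a: 5, b: 10 })));  // {"result":15}
console.log(JSON.stringify(guardedAdd("wrong-key", { a: 5, b: 10 }))); // {"error":"unauthorized"}
```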

Moreover, we believe there is great potential in pairing MCP with workflow orchestration frameworks like PocketFlow. The pairing addresses an open question: while MCP standardizes external communication, what manages the internal logic of the MCP Client application or agent?

The PocketFlow Framework

PocketFlow allows developers to define complex workflows as a series of nodes, managing state, handling logic (like branching based on results), and orchestrating the sequence of actions – including calls to local functions or calls to external tools via MCP.

In other words, while MCP focuses on how the client talks to one external tool at a time, PocketFlow defines the internal workflow orchestration within the client application. It dictates when and why to communicate.
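The idea of nodes, shared state, and branching on results can be shown with a tiny stand-in. The sketch below is not the PocketFlow API: `MiniNode` and `MiniFlow` are hypothetical names, and the runner is synchronous for brevity. Each node does some work on the shared state and returns an action name, and the flow uses that name to pick the next node.

```typescript
// Shared state that every node can read and update.
interface State {
  num1: number;
  num2: number;
  result?: number;
  log: string[];
}

// A node does some work, then names the action that selects the next node.
interface MiniNode {
  run(state: State): string;
}

// A flow maps (node, action) pairs to successor nodes and walks them in order.
class MiniFlow {
  private edges = new Map<MiniNode, Map<string, MiniNode>>();

  constructor(private start: MiniNode) {}

  connect(from: MiniNode, action: string, to: MiniNode): this {
    if (!this.edges.has(from)) this.edges.set(from, new Map());
    this.edges.get(from)!.set(action, to);
    return this;
  }

  run(state: State): void {
    let current: MiniNode | undefined = this.start;
    while (current) {
      const action: string = current.run(state);
      current = this.edges.get(current)?.get(action); // undefined ends the flow
    }
  }
}

const addNode: MiniNode = {
  run(state) {
    state.result = state.num1 + state.num2;
    // Branch on the result: large sums take a different path.
    return state.result > 100 ? "large" : "small";
  },
};
const smallNode: MiniNode = { run: (s) => { s.log.push("small sum"); return "done"; } };
const largeNode: MiniNode = { run: (s) => { s.log.push("large sum"); return "done"; } };

const flow = new MiniFlow(addNode)
  .connect(addNode, "small", smallNode)
  .connect(addNode, "large", largeNode);

const state: State = { num1: 5, num2: 10, log: [] };
flow.run(state);
console.log(state.result, state.log); // 15 [ 'small sum' ]
```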

Analogy & Practical Example

You are a Traveler following an Itinerary (your PocketFlow workflow). Your goal requires getting specific services (like a calculation) from various Service Stations (external tools).

MCP: Your combination of:

A Universal Translator device/service.

A set of Standard Request/Reply Forms. Everyone agrees to only use these forms for specific tasks when communicating via the Translator.

MCP Server: A Local Translator working at a specific Service Station (like the "Calculation Station"). They only accept Standard Forms submitted via the Universal Translator system. They use their local knowledge to translate the form for the Local Expert, get the answer, and fill out the Standard Reply Form to send back via the Universal Translator system.

Service (e.g., `add` function): The Local Expert (e.g., Mathematician) at the Service Station who does the actual work when asked by their Local Translator.

Local Function: You, the Traveler, doing the calculation yourself using your own knowledge/calculator, without needing the Translator or Forms.

Now let’s write some code.

1. Starting the Journey (run.ts):

This is you, the Traveler, starting your trip. You get your initial data (`sharedState`) and check your instructions (`mode`): will this step require using the Universal Translator system, or can you handle it yourself? You then activate your Itinerary (`flow.run`).

Code:

```typescript
// run.ts (Simplified Snippet - Traveler Starting the Trip)
// ... (Setup, Imports) ...

const mode: AdditionMode = 'mcp'; // Check instructions: use Translator ('mcp') or Self ('local')?
const sharedState: SharedState = { num1: 5, num2: 10 }; // Initial data for the trip

const flow = createAdditionFlow({ mode }); // Prepare the Itinerary based on instructions
await flow.run(sharedState); // Begin following the Itinerary

console.log(`Result (${mode}): ${sharedState.result}`); // Report final result of the trip
```

This script sets up the initial numbers, determines the `mode`, creates the appropriate PocketFlow `flow` (the Itinerary) via `createAdditionFlow`, and executes the workflow.
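The snippet relies on `AdditionMode` and `SharedState` without showing them. A plausible shape for those types, together with a small helper for turning a raw string (such as a command-line argument) into a valid mode, might look like the following. The type definitions and the `parseMode` helper are assumptions made for illustration, not code from the framework.

```typescript
// Assumed definitions; the article does not show them.
type AdditionMode = "mcp" | "local";

interface SharedState {
  num1: number;
  num2: number;
  result?: number; // filled in by the flow
}

// Turns a raw string (for example a CLI argument) into a valid mode.
function parseMode(raw: string | undefined, fallback: AdditionMode = "local"): AdditionMode {
  if (raw === undefined || raw === "") return fallback;
  if (raw === "mcp" || raw === "local") return raw;
  throw new Error(`Unknown mode "${raw}"; expected "mcp" or "local"`);
}

const sharedState: SharedState = { num1: 5, num2: 10 };
console.log(parseMode("mcp"));     // mcp
console.log(parseMode(undefined)); // local
console.log(sharedState);
```

In a script like `run.ts`, this could be wired up as `const mode = parseMode(process.argv[2]);`, so that an unexpected value fails immediately instead of silently picking a branch.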

2. Consulting the Itinerary (AdditionFlow.flow.ts):

This is your Itinerary (`createAdditionFlow`). It checks the `mode` instruction and dictates the first major step: either prepare to use the Universal Translator system and its Standard Forms (`new MCPAdditionNode()`) or get your own calculator ready (`new LocalAdditionNode()`).

Code:

```typescript
// flows/AdditionFlow.flow.ts (Snippet - The Itinerary Logic)
export function createAdditionFlow(config: AdditionFlowConfig): Flow {
  const startNode: BaseNode = (config.mode === 'mcp')
    ? new MCPAdditionNode()    // Itinerary says: Use Translator system for this step
    : new LocalAdditionNode(); // Itinerary says: Calculate this yourself

  return new Flow(startNode); // Structure the start of the journey
}
```

This function configures the PocketFlow `Flow` to start with the correct node based on the chosen mode.
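If more modes are added later, the two-way conditional can be swapped for a lookup table. The sketch below is one possible variation, not the framework's approach: the node classes are reduced to labeled stand-ins, and `startNodeFactories` and `createStartNode` are hypothetical names. Typing the table as `Record<AdditionMode, ...>` makes the compiler require an entry for every mode.

```typescript
type AdditionMode = "mcp" | "local";

// Stand-ins for the article's node classes, reduced to a label.
class LocalAdditionNode { readonly kind = "local-node"; }
class MCPAdditionNode { readonly kind = "mcp-node"; }
type StartNode = LocalAdditionNode | MCPAdditionNode;

// Record<AdditionMode, ...> requires an entry for every mode at compile time.
const startNodeFactories: Record<AdditionMode, () => StartNode> = {
  mcp: () => new MCPAdditionNode(),
  local: () => new LocalAdditionNode(),
};

// Picks the first node of the flow for the given mode.
function createStartNode(mode: AdditionMode): StartNode {
  return startNodeFactories[mode]();
}

console.log(createStartNode("mcp").kind);   // mcp-node
console.log(createStartNode("local").kind); // local-node
```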

3. Calculating It Yourself (LocalAdditionNode.node.ts):

This represents you deciding against using the external Station and simply performing the addition directly using your own calculator. No Translator or Forms needed.

Code:

```typescript
// nodes/LocalAdditionNode.node.ts (Simplified Snippet - Traveler's Own Calculation)
export class LocalAdditionNode extends BaseNode {
  async execCore(prepResult: LocalAdditionNodeState): Promise<LocalAdditionExecResult> {
    // Perform calculation directly
    const sum = prepResult.num1 + prepResult.num2;
    return { sum };
  }

  // ... (Other methods) ...
}
```

Explanation: The `execCore` method directly computes the sum locally.
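The snippet elides the node's other methods, so it does not show how `num1` and `num2` get out of the shared state or how the sum gets back into it. One common pattern, assumed here and not confirmed by the article, is a three-phase lifecycle: a `prep` step that reads from shared state, the `execCore` step that does the work, and a `post` step that writes the result back. The standalone class below (`LocalAdditionSketch`, a hypothetical name) illustrates that pattern synchronously and does not extend the real `BaseNode`.

```typescript
interface SharedState { num1: number; num2: number; result?: number; }
interface PrepResult { num1: number; num2: number; }
interface ExecResult { sum: number; }

class LocalAdditionSketch {
  // prep: pick out only what the computation needs from shared state.
  prep(shared: SharedState): PrepResult {
    return { num1: shared.num1, num2: shared.num2 };
  }

  // execCore: the actual work, mirroring the article's snippet (minus async).
  execCore(prepResult: PrepResult): ExecResult {
    return { sum: prepResult.num1 + prepResult.num2 };
  }

  // post: write the outcome back to shared state and name the next action.
  post(shared: SharedState, execResult: ExecResult): string {
    shared.result = execResult.sum;
    return "default";
  }

  // Runs the three phases in order, as a flow runner might.
  run(shared: SharedState): string {
    const prepResult = this.prep(shared);
    const execResult = this.execCore(prepResult);
    return this.post(shared, execResult);
  }
}

const shared: SharedState = { num1: 5, num2: 10 };
new LocalAdditionSketch().run(shared);
console.log(shared.result); // 15
```

Keeping `execCore` free of any shared-state access is what lets the local and MCP variants be swapped without touching the rest of the flow.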

4. Using the Universal Translator & Standard Form (MCPAdditionNode.node.ts):

Your Itinerary directs you to use the Universal Translator system. You:

Fill out the Standard Request Form (the `payload` object with `toolName` and `arguments`).

Send the form via the Universal Translator's communication channel (`axios.post`) to the target Station's Local Translator address (`endpoint`).

Receive the Standard Reply Form (`response`) back through the Universal Translator system.

Code:

```typescript
// nodes/MCPAdditionNode.node.ts (Simplified Snippet - Traveler Using Translator/Forms)
export class MCPAdditionNode extends BaseNode {
  async execCore(prepResult: MCPAdditionNodeState): Promise<MCPAdditionExecResult> {
    const endpoint = `${prepResult.mcpServerUrl || DEFAULT_MCP_URL}/mcp/call`; // Local Translator address

    // Fill out Standard Request Form (MCP Request)
    const payload = {
      toolName: 'add',
      arguments: { a: prepResult.num1, b: prepResult.num2 },
    };

    try {
      // Send Form via Universal Translator channel
      const response = await axios.post(endpoint, payload);

      // Receive and process Standard Reply Form (MCP Response) via Translator
      if (response.data.error) {
        throw new Error(response.data.error); // The Station replied with an error form
      }
      return { sum: response.data.result };
    } catch (error: any) {
      /* ... Handle communication failure ... */
      throw error; // Rethrow so every path either returns a result or fails loudly
    }
  }

  // ... (Other methods) ...
}
```

This node handles formatting the MCP request (`payload`, the Standard Form), sending it via HTTP (`axios.post`, using the Translator's channel), and processing the MCP response (`response.data`, the Standard Reply Form received via the Translator).
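Because the reply arrives over the network, it helps to treat `response.data` as untrusted until its shape has been checked. The helper below is a minimal sketch of that check. `unwrapNumericResult` is a hypothetical name, and it assumes the simplified `{ result }` / `{ error }` reply shape used in this article.

```typescript
// Validates an untrusted response body and extracts a numeric result.
function unwrapNumericResult(data: unknown): number {
  if (typeof data !== "object" || data === null) {
    throw new Error("Response body is not an object");
  }
  const body = data as { result?: unknown; error?: unknown };

  // An explicit error reply takes priority over anything else in the body.
  if (body.error !== undefined) {
    throw new Error(`Tool reported an error: ${String(body.error)}`);
  }
  if (typeof body.result !== "number" || !Number.isFinite(body.result)) {
    throw new Error("Response has no numeric result");
  }
  return body.result;
}

console.log(unwrapNumericResult({ result: 15 })); // 15
```

Inside `execCore`, this could replace the direct property access with `return { sum: unwrapNumericResult(response.data) };`. Note that axios rejects responses outside the 2xx range by default, so a 500 reply from the server lands in the `catch` block, with the body available on `error.response.data`, and never reaches this check.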

5. The Local Translator at the Calculation Station (server.ts):

This code represents the Local Translator working at the "Calculation Station" Help Desk. They:

Wait for Travelers submitting Standard Request Forms via the Universal Translator system (`app.post`).

Use their local knowledge (or internal translation matrix) to understand the standard form (`req.body`).

Ask the Local Expert (Mathematician, the `add` function) to perform the task in the local "language".

Fill out the Standard Reply Form (`res.json`) using the standard protocol to send back through the Universal Translator system.

Code:

```typescript
// server.ts (Simplified Snippet - Local Translator at the Station)
// ... (Imports, setup, add function - the Local Expert) ...

app.post('/mcp/call', (req: Request, res: Response) => { // Listening for Standard Forms via Translator System
  const { toolName, arguments: args } = req.body; // Understand the Standard Form

  // Reject forms addressed to a tool this Station does not offer
  if (toolName !== 'add') {
    res.status(404).json({ error: `Unknown tool: ${toolName}` });
    return;
  }

  try {
    // Ask Local Expert based on Form content ('add')
    const result = add(args.a, args.b);

    // Fill out Standard Reply Form (MCP Response)
    res.json({ result });
  } catch (error: any) {
    // Fill out Standard Error Reply Form
    res.status(500).json({ error: error.message });
  }
});

// ... (Start server - Open the Station's Help Desk for Translator communications) ...
```

This Express server implements the MCP endpoint. It receives requests formatted as standard MCP JSON, calls the local `add` function, and returns the result in the standard MCP JSON response format for the Translator system to relay.
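As a station starts offering more than one tool, the dispatch and validation logic can be pulled out of the route handler into a plain function that is easy to test without starting a server. The sketch below shows one way to do that. `handleMcpCall`, the `tools` registry, and the specific status codes are assumptions made for illustration, and the request shape is the simplified one used in this article.

```typescript
type ToolFn = (args: Record<string, unknown>) => unknown;

interface Reply {
  status: number;
  body: { result: unknown } | { error: string };
}

// The Local Expert.
const add = (a: number, b: number): number => a + b;

// Tool registry: maps a tool name to an adapter around the underlying service.
const tools = new Map<string, ToolFn>([
  ["add", (args) => {
    const { a, b } = args;
    if (typeof a !== "number" || typeof b !== "number") {
      throw new TypeError('"add" expects numeric arguments "a" and "b"');
    }
    return add(a, b);
  }],
]);

// Framework-independent core of the endpoint: request body in, status and body out.
function handleMcpCall(body: unknown): Reply {
  const req = (typeof body === "object" && body !== null ? body : {}) as {
    toolName?: unknown;
    arguments?: unknown;
  };
  if (typeof req.toolName !== "string") {
    return { status: 400, body: { error: "toolName must be a string" } };
  }
  const tool = tools.get(req.toolName);
  if (!tool) {
    return { status: 404, body: { error: `Unknown tool: ${req.toolName}` } };
  }
  const args = (typeof req.arguments === "object" && req.arguments !== null
    ? req.arguments
    : {}) as Record<string, unknown>;
  try {
    return { status: 200, body: { result: tool(args) } };
  } catch (e) {
    // Adapter rejected the arguments.
    return { status: 400, body: { error: e instanceof Error ? e.message : String(e) } };
  }
}

console.log(JSON.stringify(handleMcpCall({ toolName: "add", arguments: { a: 5, b: 10 } })));
```

The Express route then shrinks to two lines: `const reply = handleMcpCall(req.body);` followed by `res.status(reply.status).json(reply.body);`.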

Let’s bring it together now.

MCP (the Translator + Standard Request/Reply Forms) provides the universal communication layer.

PocketFlow (the Traveler following an Itinerary) decides when to use this system versus handling things locally.

The MCP Server (Local Translator) is the essential component at each Service Station that makes the "magic" of understanding the standard requests and translating them for the Local Expert possible.

A More Connected AI Universe

The journey towards powerful AI requires solving the communication barrier between reasoning models and the vast world of digital tools and services. MCP offers a compelling vision for a standardized solution: an AI Babel Fish enabling understanding between models and tools.

However, standardization alone isn't enough. Within the AI applications themselves, robust workflow orchestration, provided by frameworks like PocketFlow, is essential for managing complex sequences of tasks, handling state, and deciding when and how to leverage external capabilities via MCP.

Together, MCP and workflow orchestration provide a powerful paradigm: PocketFlow for the internal plan, MCP for standardized communication. And though the MCP standard is still maturing, understanding its principles and how it interacts with workflow management prepares us for building the next generation of integrated and truly helpful AI systems.

Join our waitlist to build workflows on our platform, and if you are a developer, make sure to play with our framework! We just enabled MCP communication: a server that provides a tool via MCP, and a client (within the Pocket Flow Framework) that calls that tool over MCP.